数据准备

创建一个log文件

1

2

3

4

5

6vim test.log

welcome bigdata test hello

bigdata test hello

hello welcome

tab键分隔上传数据到hdfs

1

2

3

4

5

6

7

8

9

10# 创建测试数据文件夹

hadoop fs -mkdir /testData

# 将我们创建的log文件上传到hdfs上

hadoop fs -put test.log /testData/

# 检查一下上传成功了没

hadoop fs -text /testData/test.log

19/03/04 21:09:59 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

welcome bigdata test hello

bigdata test hello

hello welcome

Hive操作

启动hive并加载数据

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19- 创建表test_wc

create table test_wc(sentence string);

- 加载数据

load data inpath 'hdfs://hadoop001:8020/testData/test.log' into table test_wc;

Loading data to table default.test_wc

Table default.test_wc stats: [numFiles=1, totalSize=60]

OK

Time taken: 1.892 seconds

- 检查数据有没有加载到hive中

select * from test_wc;

OK

welcome bigdata test hello

bigdata test hello

hello welcome

Time taken: 1.165 seconds, Fetched: 3 row(s)执行SQL

1

2

3

4

5select word, count(1) c

from

(select explode(split(sentence,'\t')) as word from test_wc) t

group by word

order by c desc;查看执行过程

日志也显示了整个sql的执行过程

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44Query ID = hadoop_20190304211515_2a6673b2-f612-408d-abb4-70d6e8bb8263

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

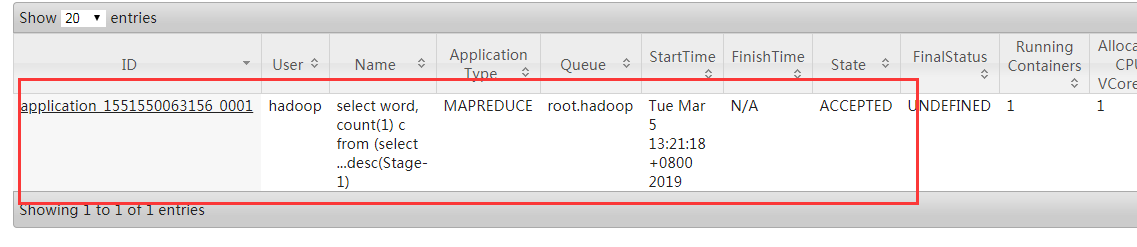

Starting Job = job_1551550063156_0001, Tracking URL = http://hadoop001:8088/proxy/application_1551550063156_0001/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1551550063156_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2019-03-04 21:22:15,821 Stage-1 map = 0%, reduce = 0%

2019-03-04 21:22:37,684 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 19.85 sec

2019-03-04 21:22:47,412 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 25.57 sec

MapReduce Total cumulative CPU time: 25 seconds 570 msec

Ended Job = job_1551550063156_0001

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1551550063156_0002, Tracking URL = http://hadoop001:8088/proxy/application_1551550063156_0002/

Kill Command = /home/hadoop/app/hadoop/bin/hadoop job -kill job_1551550063156_0002

Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 1

2019-03-04 21:23:03,025 Stage-2 map = 0%, reduce = 0%

2019-03-04 21:23:09,833 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 1.22 sec

2019-03-04 21:23:17,328 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 4.54 sec

MapReduce Total cumulative CPU time: 4 seconds 540 msec

Ended Job = job_1551550063156_0002

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 25.57 sec HDFS Read: 6787 HDFS Write: 195 SUCCESS

Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 4.54 sec HDFS Read: 4679 HDFS Write: 35 SUCCESS

Total MapReduce CPU Time Spent: 30 seconds 110 msec

OK

hello 3

welcome 2

test 2

bigdata 2

Time taken: 146.683 seconds, Fetched: 4 row(s)

执行浅析

先来看一下sql

1

2

3

4

5select word, count(1) c

from

(select explode(split(sentence,'\t')) as word from test_wc) t

group by word

order by c desc;子查询

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15先单独执行一下子查询

select explode(split(sentence,'\t')) as word from test_wc

OK

welcome

bigdata

test

hello

bigdata

test

hello

hello

welcome

Time taken: 0.194 seconds, Fetched: 9 row(s)

这里我们调用explode对每一行进行了展开,同时调用了split按照tab进行数据分隔分类聚合

1

2

3

4

5

6select word, count(1) c

from t

group by word

order by c desc;

子查询搞清楚之后,这里就是按照SQL的写法,调用了group对单词进行了一个分类注意点

1

2

3

4- 看日志可以看到,整个过程是经过两个mapreduce才完成了整个步骤

- 但是我们单独执行select explode(split(sentence,'\t')) as word from test_wc的时候却一次mapreduce过程都没有

- 待解答